What is Parallel Computing?

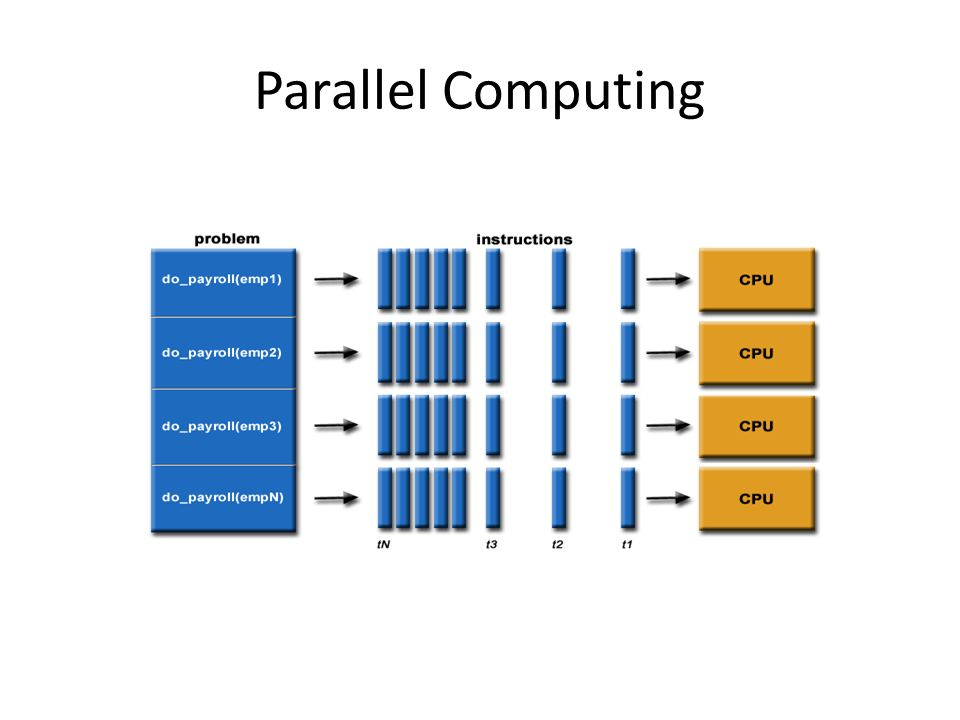

Parallel computing is an architecture in which multiple processors execute different, smaller calculations simultaneously, all derived from a larger, more complex problem.

Parallel computing involves breaking down a larger problem into smaller, independent, often similar parts that multiple processors can execute simultaneously, communicating through shared memory or by passing messages. The partial results are then combined as part of an overall algorithm. The primary objective of parallel computing is to increase available computational power for faster application processing and problem-solving.
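The break-down-and-combine approach described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production implementation: `split_into_chunks` and `parallel_sum` are hypothetical helper names, and the sketch uses threads from the standard `concurrent.futures` module so it runs anywhere. In standard CPython, the global interpreter lock (GIL) prevents pure-Python threads from computing in parallel, so for CPU-heavy work you would typically swap in `ProcessPoolExecutor`, which offers the same interface.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(data, n_chunks):
    """Split a list into n_chunks contiguous parts whose sizes differ by at most one."""
    size, remainder = divmod(len(data), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        # The first `remainder` chunks each take one extra element.
        end = start + size + (1 if i < remainder else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def parallel_sum(data, n_workers=4):
    """Break the problem into chunks, sum them concurrently, then combine."""
    chunks = split_into_chunks(data, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # Each worker sums one chunk independently of the others.
        partial_sums = list(pool.map(sum, chunks))
    # Combine step: merge the partial results into the final answer.
    return sum(partial_sums)


if __name__ == "__main__":
    numbers = list(range(1, 1_000_001))
    print(parallel_sum(numbers))  # 500000500000
```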

Parallel computing infrastructure is usually located within a single data center, where multiple processors are installed in server racks. The application server distributes computation requests in small chunks, which are then executed simultaneously on each server.

Parallel computing solutions are available from both proprietary and open-source vendors, and there are four main types of parallel computing: bit-level parallelism, instruction-level parallelism, task parallelism, and superword-level parallelism.

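- Bit-level parallelism: Relies on increasing the processor word size, which reduces the number of instructions needed to operate on variables larger than the word length. For example, an 8-bit processor needs two instructions to add two 16-bit integers, while a 16-bit processor needs only one.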

- Instruction-level parallelism: Executes several instructions from the same instruction stream at the same time. In hardware-based dynamic parallelism, the processor decides at runtime which instructions to execute in parallel; in software-based static parallelism, the compiler makes this decision.

- Task parallelism: Involves parallelizing computer code across multiple processors, allowing several different tasks to run simultaneously on the same data.

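- Superword-level parallelism: A vectorization technique that exploits parallelism in straight-line (inline) code, such as independent operations on coordinates or color channels, by combining them into single vector instructions.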
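Task parallelism, running several different tasks at the same time on the same data, can be sketched with Python's standard `concurrent.futures` module. In this hypothetical example, `summarize` submits four different statistics functions over one list; the function name and the choice of statistics are illustrative assumptions. Because it uses threads, CPython's GIL limits true parallelism for CPU-bound work; `ProcessPoolExecutor` is the usual drop-in alternative.

```python
from concurrent.futures import ThreadPoolExecutor
import statistics


def summarize(data):
    """Run several *different* tasks concurrently over the *same* data."""
    tasks = {
        "min": min,
        "max": max,
        "mean": statistics.mean,
        "median": statistics.median,
    }
    with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
        # submit() schedules each task; each future will hold that task's result.
        futures = {name: pool.submit(func, data) for name, func in tasks.items()}
        # result() waits for each task to finish and collects its output.
        return {name: future.result() for name, future in futures.items()}


if __name__ == "__main__":
    print(summarize([3, 1, 4, 1, 5, 9, 2, 6]))
```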

Parallel applications are typically classified by how often their subtasks communicate: fine-grained parallelism, where subtasks communicate many times per second; coarse-grained parallelism, where they communicate less frequently; and embarrassing parallelism, where they rarely or never communicate. Mapping is a common way to solve embarrassingly parallel problems: a simple operation is applied to every element of a sequence, with no communication between subtasks.
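Solving an embarrassingly parallel problem by mapping might look like the following minimal sketch, which applies a primality check to each number independently. `is_prime` and `parallel_map` are hypothetical names chosen for illustration, and threads are used for portability (for CPU-heavy pure-Python work in CPython, `ProcessPoolExecutor` would usually be substituted because of the GIL). Because no element depends on another, the workers never need to communicate.

```python
from concurrent.futures import ThreadPoolExecutor
import math


def is_prime(n):
    """Independent per-element work: no element needs results from another."""
    if n < 2:
        return False
    for divisor in range(2, math.isqrt(n) + 1):
        if n % divisor == 0:
            return False
    return True


def parallel_map(func, items, n_workers=4):
    """Apply func to every item concurrently; results keep the input order."""
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        return list(pool.map(func, items))


if __name__ == "__main__":
    print(parallel_map(is_prime, range(10)))
```

`Executor.map` returns results in input order even when workers finish out of order, so no reassembly step is needed.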

Why is parallel computing important?

The speed and efficiency of computing have been instrumental in driving technological advances over the past fifty years, such as smartphones, high-performance computing (HPC), artificial intelligence (AI), and machine learning (ML). By allowing computers to handle tasks quickly and efficiently, parallel computing plays a vital role in the digital transformation of many businesses.

History and development

Interest in parallel computing began as programmers and manufacturers sought ways to create more power-efficient processors. From the 1950s through the 1970s, scientists and engineers built the first computers that used shared memory and performed parallel operations on datasets.

This work led to the Caltech Concurrent Computation project in the 1980s, which introduced a new form of parallel computing using 64 Intel processors.

In the 1990s, the ASCI Red supercomputer, with its massively parallel processors (MPPs), achieved a groundbreaking trillion operations per second, marking the start of MPP dominance. Around the same time, clusters—parallel computing systems linking computer nodes over commercial networks—emerged and eventually surpassed MPPs for many applications.

Today, parallel computing, especially through multi-core processors and graphics processing units (GPUs), remains essential in computer science. GPUs are frequently used alongside CPUs to boost data throughput and handle more calculations simultaneously, enhancing many modern business applications.

How does parallel computing work?

Parallel computing involves a variety of devices and architectures, ranging from supercomputers to smartphones. In its most advanced form, it uses hundreds of thousands of cores to address complex challenges, such as discovering new cancer drugs or supporting the search for extraterrestrial intelligence (SETI). Even at its simplest, parallel computing can speed up tasks like sending an email from your phone compared to using serial computing systems.

Parallel computers are commonly organized around three main memory architectures: shared memory, distributed memory, and hybrid memory. Programs on distributed-memory and hybrid systems typically communicate through the Message Passing Interface (MPI), a standard that defines portable message-passing routines for C and Fortran, which are also widely used from C++. Shared-memory programs more often communicate through shared variables. The development of open-source MPI implementations has been crucial in advancing applications and software that utilize parallel computing.
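The difference between the two communication styles can be illustrated within a single Python process. This sketch does not use MPI: threads stand in for processors, a lock-protected variable stands in for shared memory, and a `queue.Queue` stands in for message passing between nodes. Both function names are hypothetical. A real distributed-memory program would exchange messages between separate processes or machines, typically through an MPI implementation.

```python
import queue
import threading


def shared_memory_total(chunks):
    """Workers add their partial sums directly into one shared variable."""
    total = 0
    lock = threading.Lock()

    def worker(chunk):
        nonlocal total
        partial = sum(chunk)
        with lock:  # serialize updates so concurrent writes are not lost
            total += partial

    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total


def message_passing_total(chunks):
    """Workers share no mutable state; each sends its partial sum as a message."""
    inbox = queue.Queue()

    def worker(chunk):
        inbox.put(sum(chunk))  # "send" the result to the coordinator

    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    # "Receive" exactly one message per worker and combine them.
    return sum(inbox.get() for _ in chunks)


if __name__ == "__main__":
    data = [[1, 2, 3], [4, 5], [6, 7, 8, 9]]
    print(shared_memory_total(data), message_passing_total(data))  # 45 45
```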

Fundamentals of Parallel Computer Architecture

Parallel computer architecture spans a broad range of parallel computers, classified based on the level of hardware support for parallelism. Effective utilization of these machines depends on both parallel computer architecture and programming techniques. The main classes of parallel computer architectures include:

- Multi-core Computing: This involves processors with two or more separate cores that execute program instructions in parallel. Cores may be integrated on multiple dies within a single chip package or on a single integrated circuit die, and may use architectures such as multithreading, superscalar, vector, or VLIW. Multi-core architectures can be homogeneous, with identical cores, or heterogeneous, with non-identical cores.

- Symmetric Multiprocessing (SMP): This architecture includes two or more independent, homogeneous processors controlled by a single operating system instance, which treats all processors equally. These processors share a single main memory and access all common resources and devices. Each processor has private cache memory and may be connected via on-chip mesh networks, allowing any processor to handle tasks regardless of where the data is stored in memory.

- Distributed Computing: Components of a distributed system are located on different networked computers and coordinate their work by passing messages, for example over HTTP, RPC-like connectors, or message queues. Key features of distributed systems are component concurrency and the independent failure of components. Distributed programs typically follow client–server, three-tier, n-tier, or peer-to-peer architectures. Distributed and parallel computing overlap, and the terms are sometimes used interchangeably.

- Massively Parallel Computing: This involves using many computers or processors to perform computations in parallel. One approach is to group processors in a tightly structured, centralized cluster, while another approach, grid computing, involves many distributed computers working together and communicating via the Internet.

Other parallel computer architectures include specialized parallel computers, cluster computing, grid computing, vector processors, application-specific integrated circuits, general-purpose computing on graphics processing units (GPGPU), and reconfigurable computing with field-programmable gate arrays. In any parallel computer structure, main memory is either distributed or shared.

Parallel Computing Software Solutions and Techniques

To support parallel computing on parallel hardware, various concurrent programming languages, APIs, libraries, and parallel programming models have been developed. Some notable software solutions and techniques for parallel computing include:

- Application Checkpointing: This technique enhances fault tolerance in computing systems by recording the current states of an application’s variables. In the event of a failure, the application can restore and resume from this saved state. Checkpointing is essential for high-performance computing systems that operate across many processors.

- Automatic Parallelization: A compiler or tool converts sequential code into multi-threaded code so that it can use multiple processors simultaneously in a shared-memory multiprocessor (SMP) environment. Automatic parallelization typically proceeds in stages: parsing, analysis, scheduling, and code generation. Examples of parallelizing compilers and tools include the Paradigm compiler, Polaris compiler, Rice Fortran D compiler, SUIF compiler, and Vienna Fortran compiler.

- Parallel Programming Languages: These languages are generally categorized as either distributed memory or shared memory. Distributed memory programming languages rely on message passing for communication, while shared memory programming languages use shared memory variables for communication.
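Application checkpointing can be sketched in a few lines, under simplifying assumptions: the job's entire state fits in a small JSON-serializable dictionary, and a single process runs the job. `save_checkpoint`, `load_checkpoint`, `run`, and the `fail_at` parameter (which simulates a crash) are hypothetical names for illustration. Real HPC checkpointing systems coordinate snapshots across many processors, which this sketch does not attempt.

```python
import json
import os
import tempfile


def save_checkpoint(path, state):
    """Write the state to a temp file, then rename it over the old checkpoint."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    # os.replace swaps the files in one step (atomic on POSIX), so a crash
    # mid-write leaves the previous checkpoint intact.
    os.replace(tmp_path, path)


def load_checkpoint(path):
    """Return the saved state, or a fresh starting state if none exists."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"next_index": 0, "total": 0}


def run(items, path, checkpoint_every=100, fail_at=None):
    """Sum items, saving progress periodically; fail_at simulates a crash."""
    state = load_checkpoint(path)  # resume wherever the last run stopped
    for i in range(state["next_index"], len(items)):
        if i == fail_at:
            raise RuntimeError(f"simulated failure at item {i}")
        state["total"] += items[i]
        state["next_index"] = i + 1
        if state["next_index"] % checkpoint_every == 0:
            save_checkpoint(path, state)
    save_checkpoint(path, state)  # final checkpoint records the finished job
    return state["total"]


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "checkpoint.json")
    items = list(range(1, 1001))
    try:
        run(items, path, fail_at=550)  # crashes partway through
    except RuntimeError as err:
        print(err)
    print(run(items, path))  # resumes from item 500 and prints 500500
```

The second call to `run` repeats only the work done after the last checkpoint (items 500 to 549 here), rather than starting over.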

Difference Between Parallel Computing and Cloud Computing

Cloud computing refers to providing scalable services like databases, data storage, networking, servers, and software over the Internet on an as-needed, pay-as-you-go basis.

These services can be public or private, managed entirely by the provider, and offer remote access to data, work, and applications from any device with an Internet connection. The main service categories are Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS).

As a relatively new software development paradigm, cloud computing enables broader access to parallel computing through large virtual clusters. This allows average users and smaller organizations to benefit from parallel processing power and storage typically available only to large enterprises.

Difference Between Parallel Processing and Parallel Computing

Parallel processing is a computing method where different parts of a complex task are divided and executed simultaneously on multiple CPUs, thus reducing processing time.

Typically, computer scientists use parallel processing software tools to divide tasks among processors. These tools also handle the reassembly and interpretation of data once each processor has completed its assigned calculations. This can be done through a computer network or a single computer with multiple processors.

Parallel processing and parallel computing often occur together, and the terms are frequently used interchangeably. However, parallel processing refers to the hardware (the cores and CPUs operating in parallel), while parallel computing focuses on how software is designed to take advantage of that hardware.

Difference Between Sequential and Parallel Computing

Sequential computing, also known as serial computation, involves using a single processor to execute a program that is divided into a sequence of discrete instructions, with each instruction executed one after the other without any overlap. Traditionally, software has been programmed sequentially, which simplifies the approach but is limited by the processor’s speed and its ability to handle each instruction series. Unlike uni-processor machines that use sequential data structures, parallel computing environments use concurrent data structures.

Measuring performance in sequential programming is generally simpler and less critical than in parallel computing, and often focuses on identifying system bottlenecks. Benchmarking parallel programs is more complex and typically relies on benchmarking and performance regression testing frameworks that use a variety of measurement techniques, including statistical analysis and multiple repetitions. Avoiding bottlenecks by moving data efficiently through the memory hierarchy is a particularly significant challenge in data science, machine learning, and artificial intelligence applications that use parallel computing.
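A benchmark that repeats each measurement and summarizes it with statistics, rather than trusting a single run, could look like this minimal sketch. The workload is hypothetical: `time.sleep` stands in for waiting on disk or network, a case where Python threads do overlap even under CPython's GIL. The function names and the choice of median and standard deviation as summaries are assumptions for illustration.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def benchmark(func, repeats=5):
    """Time func several times; report median and spread, not one run."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        func()
        timings.append(time.perf_counter() - start)
    return {
        "median_s": statistics.median(timings),
        "stdev_s": statistics.stdev(timings) if repeats > 1 else 0.0,
    }


def simulated_io_task():
    time.sleep(0.02)  # stands in for waiting on disk or network


def run_sequential(n_tasks=4):
    # One task after another, with no overlap.
    for _ in range(n_tasks):
        simulated_io_task()


def run_parallel(n_tasks=4):
    with ThreadPoolExecutor(max_workers=n_tasks) as pool:
        for _ in range(n_tasks):
            pool.submit(simulated_io_task)
    # Leaving the "with" block waits for every submitted task to finish.


if __name__ == "__main__":
    seq = benchmark(run_sequential)
    par = benchmark(run_parallel)
    print(f"sequential median: {seq['median_s']:.3f} s")
    print(f"parallel median:   {par['median_s']:.3f} s")
    print(f"speedup: {seq['median_s'] / par['median_s']:.1f}x")
```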

Sequential computing is essentially the opposite of parallel computing. While parallel computing can be more complex and costly upfront, the benefit of solving problems faster often justifies the investment in parallel computing hardware.

Enterprise benefits of parallel computing

- Cost Reduction: Serial computing forces a single processor to tackle complex problems one step at a time, which can take minutes or hours for tasks that parallel computing completes in seconds; finishing work faster reduces the time, and therefore the cost, of computation. For instance, early iPhones using serial computing might have taken a minute to open an app or email, while iPhones with multi-core processors, introduced starting in 2011, handle these tasks significantly faster.

- Complex Problem Solving: As computing technology advances to address increasingly complex issues, systems need to execute thousands or even millions of tasks simultaneously. Modern machine learning (ML) models rely on parallel computing, which distributes complex algorithms across multiple processors. In contrast, serial computing would cause delays due to its single-threaded nature, performing one calculation at a time on a single processor.

- Faster Analytics: Parallel computing enhances the processing of large datasets, accelerating the interactive queries essential for data analysis. With over a quintillion bytes of information generated daily, companies face challenges in extracting relevant insights. Parallel processing, utilizing computers with multiple cores, can sift through data much faster than serial computers.

- Increased Efficiencies: Parallel computing enables computers to use resources more effectively than those relying on serial computing. Modern advanced computer systems with multiple cores and processors can run multiple programs simultaneously and perform various tasks concurrently, increasing overall efficiency.

Parallel computing use cases

From blockchains and smartphones to gaming consoles and chatbots, parallel computing is integral to many technologies that shape our world. Here are some examples:

- Smartphones: Many smartphones use parallel processing to perform tasks more quickly and efficiently. For instance, the iPhone 14 features a 6-core CPU and a 5-core GPU, which help it run 17 trillion tasks per second, a level of performance that would be out of reach for serial computing.

- Blockchains: Blockchain technology, which supports cryptocurrencies, voting systems, healthcare, and other advanced digital applications, depends on parallel computing to link multiple computers for validating transactions and inputs. This allows transactions to be processed simultaneously, enhancing throughput and scalability.

- Laptop Computers: Modern high-performance laptops, including MacBooks, Chromebooks, and ThinkPads, use multi-core processors as the basis of parallelism. Multi-core processors such as the Intel Core i5, and workstations such as the HP Z8, enable real-time video editing, 3D graphics rendering, and other demanding tasks.

- Internet of Things (IoT): IoT depends on data from sensors connected to the internet. Parallel computing is essential for analyzing this data to gain insights and manage complex systems such as power plants, dams, and traffic systems. Traditional serial computing cannot deliver the speed required, making parallel computing vital for IoT advancement.

- Artificial Intelligence and Machine Learning: Parallel computing is crucial for training machine learning (ML) models used in AI applications like facial recognition and natural language processing (NLP). It allows simultaneous operations, significantly reducing the time needed to train ML models accurately.

- Supercomputers: Supercomputers are often referred to as parallel computers because they rely on parallel computing. For example, the Summit supercomputer at Oak Ridge National Laboratory in the United States can perform about 200 quadrillion operations per second at peak, helping researchers deepen our understanding of physics and the natural environment.

FAQs

What is parallel computing?

Parallel computing involves breaking down a larger problem into smaller, independent tasks that can be processed simultaneously by multiple processors. This method increases computational power and speeds up application processing by executing different parts of a problem at the same time and combining the results.

Why is parallel computing important?

Parallel computing is crucial for accelerating processing times and improving efficiency in handling complex problems. It has driven advancements in technology by enabling faster and more effective solutions in fields like smartphones, high-performance computing, artificial intelligence (AI), and machine learning (ML).

How does parallel computing work?

Parallel computing works by dividing a complex problem into smaller tasks that can be executed concurrently on multiple processors. These processors, which may be part of a single machine or distributed across a network, work together to solve the problem more quickly than a single processor could. Key architectures include shared memory, distributed memory, and hybrid memory; distributed-memory and hybrid systems commonly use the Message Passing Interface (MPI) standard for communication between processors.

How does parallel computing differ from sequential computing?

Sequential computing uses a single processor to execute a program step-by-step, one instruction at a time, which can be slower. In contrast, parallel computing uses multiple processors to perform different tasks simultaneously, significantly speeding up the overall process.

What is the difference between parallel computing and cloud computing?

Parallel computing focuses on executing multiple calculations simultaneously to enhance performance, while cloud computing provides scalable services such as databases and software over the Internet on a pay-as-you-go basis. Cloud computing often utilizes parallel computing to offer powerful processing and storage capabilities to users.

How has parallel computing evolved over time?

Parallel computing began with early efforts in the 1950s to improve processor efficiency. Significant milestones include the Caltech Concurrent Computation project in the 1980s and the ASCI Red supercomputer in the 1990s. Today, parallel computing is integral to multi-core processors and GPUs, advancing applications in various fields.

What challenges does parallel computing address?

Parallel computing addresses challenges such as speeding up complex computations, improving efficiency, and handling large volumes of data. It overcomes limitations of serial computing by allowing multiple processors to work on different parts of a problem simultaneously.

Conclusion

Parallel computing has transformed technology by allowing simultaneous processing across multiple processors. This advancement speeds up complex problem-solving and boosts efficiency, impacting everything from smartphones to supercomputers. As technology evolves, parallel computing will remain crucial in driving innovation and addressing increasingly complex challenges.
