AI-Driven Large Language Model Use Cases and Applications

Large Language Models (LLMs), such as those that power ChatGPT, have improved considerably over the past few years, and as a result they are being used in a growing variety of applications across numerous industries. Their potential uses range from language translation systems to customer-support chatbots. In this article, we examine some of these use cases and evaluate the real-world applications they offer to both people and businesses.

What Is a Large Language Model (LLM)?

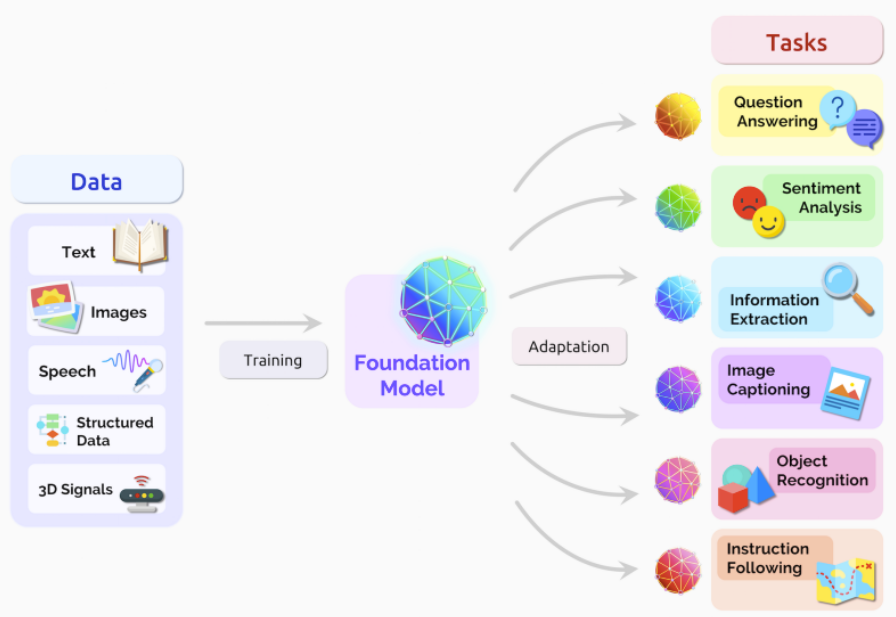

LLMs, or large language models, have recently gained popularity in the field of artificial intelligence. These powerful technologies drive some of the most intriguing AI applications now being developed, from natural language processing to image generation.

GPT-3, short for “Generative Pre-trained Transformer 3,” is one LLM that has recently gained attention. OpenAI’s language model has been used in a variety of projects, from chatbots to poetry.

But what are LLMs, and how do they work? Essentially, LLMs are AI models that process enormous volumes of text data and use what they learn from it to produce new text, anything from a single phrase to a whole article.

One of the main advantages of LLMs is that, because they learn from enormous amounts of data, they can produce more accurate and natural-sounding text than conventional rule-based models. They are also extremely versatile, since they can adapt to a wide range of tasks and settings.

LLMs do have certain difficulties, though. One of the main problems is the considerable amount of energy and computing resources needed to train and operate these models. There are also ethical concerns about using LLMs to fabricate news or sway public opinion.

Despite these obstacles, LLMs are expected to become even more important to AI applications in the years to come. Whether you’re a developer, a marketer, or simply someone interested in the latest technology, this is a subject well worth keeping an eye on.

Let’s explore the meaning of the acronym LLM and learn what it stands for:

- Large: The term “large” refers to the huge training datasets and the vast number of parameters that LLMs use. For example, the Generative Pre-trained Transformer version 3 (GPT-3) has 175 billion parameters, and its training corpus was filtered from about 45 TB of raw text. This scale is what allows LLMs to handle a very broad variety of applications.

- Language: LLMs are primarily concerned with interpreting and processing human language, as indicated by the term “language.”

- Model: This indicates that LLMs are built to find patterns in data and make predictions.

LLMs are linguistic wizards that examine the relationships between words to model human language. They do this using several innovative techniques developed by the machine learning research community in recent years. Some of the most notable are listed below:

- Transformers – The transformer is a deep learning architecture that tracks relationships between the elements of a sequence, which lets it capture the meaning underlying the data and transform it into different forms. Its key mechanism is self-attention: when processing each word, the model weighs how relevant every other word in the sequence is to it. This enables the model to understand a text by taking its broader context into account.

- Bidirectional Encoding – This approach uses a transformer encoder that looks at the context on both sides of each word (i.e., the words before and after it), which helps it resolve the meaning of ambiguous language. It was popularized by the BERT (Bidirectional Encoder Representations from Transformers) paper and has since been widely adopted.

- Autoregressive Models – These models use the words that have come before to predict the word that comes next. They are widely used when a model must produce text that is novel but similar to its training data. Think of them as linguistic fortune tellers that predict the future of a sentence based on its past.
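To make self-attention and the difference between bidirectional and autoregressive models more concrete, here is a minimal single-head sketch in Python with NumPy. The random weights and toy sizes are assumptions for illustration; real transformers learn these projections and stack many attention heads and layers. Setting `causal=True` hides later tokens, as an autoregressive model does, while `causal=False` lets every token attend to both sides, as in bidirectional encoding.

```python
import numpy as np

def self_attention(x, w_q, w_k, w_v, causal=False):
    """Single-head scaled dot-product self-attention.

    x: (seq_len, d_model) token vectors; w_q, w_k, w_v: (d_model, d_k) projections.
    causal=False: every token sees both sides (bidirectional, BERT-style).
    causal=True: each token sees only itself and earlier tokens (autoregressive, GPT-style).
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])          # (seq_len, seq_len) relevance scores
    if causal:
        # Mask positions j > i so token i cannot look at future tokens.
        mask = np.triu(np.ones_like(scores, dtype=bool), k=1)
        scores = np.where(mask, -np.inf, scores)
    scores -= scores.max(axis=-1, keepdims=True)      # numerical stability before exp
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)    # softmax: each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))                            # 4 toy tokens, 8-dim embeddings
w_q, w_k, w_v = (rng.normal(size=(8, 8)) for _ in range(3))

_, bi = self_attention(x, w_q, w_k, w_v, causal=False)
_, ar = self_attention(x, w_q, w_k, w_v, causal=True)
print(np.round(ar, 2))  # upper triangle is all zeros: no peeking at future tokens
```

In the causal case, the first row puts all of its weight on the first token, because there are no earlier tokens for it to attend to.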

How Do LLMs Work?

Large Language Models are created by applying deep learning algorithms to massive amounts of text data so that they learn linguistic patterns. The transformer neural network architecture, introduced by Google researchers in 2017, has quickly become the dominant architecture for LLMs.

Here’s a high-level overview of how LLMs work:

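- Pre-training: LLMs are typically pre-trained on large amounts of text data, such as Wikipedia articles or news articles. During pre-training, an autoregressive LLM (such as GPT) learns to predict the next word in a sequence given the previous words, while a bidirectional model (such as BERT) learns to predict words that have been masked out of a sentence.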

- Fine-tuning: After pre-training, the LLM can be fine-tuned on a specific task, such as sentiment analysis or machine translation. During fine-tuning, the LLM learns to make predictions based on the specific task and data it is trained on.

- Inference: Once the LLM is trained, it can be used for inference, which involves making predictions based on new inputs. For example, a trained LLM could be used to generate new text, classify text as positive or negative, or translate text from one language to another.

- Word Embeddings: To process text data, LLMs represent each word (in practice, each subword token) as a high-dimensional vector called an embedding. Words with similar meanings end up with similar vectors, and the model’s later layers refine these vectors using the surrounding context, allowing the LLM to understand the relationships between words in a sentence.

- Attention Mechanisms: LLMs use attention mechanisms to focus on the parts of a sentence that are most relevant to the task at hand. Attention mechanisms allow the LLM to learn which words in a sentence are most important and which ones matter less.

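- Decoder and Encoder Layers: LLMs process input data with stacks of encoder layers, decoder layers, or both. In an encoder-decoder model such as T5, the encoder layers process the input and produce a sequence of hidden states, and the decoder layers use these hidden states to generate predictions. Encoder-only models such as BERT and decoder-only models such as GPT use just one of the two stacks.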

- Backpropagation: During training, LLMs use a process called backpropagation to adjust the weights and biases in the neural network. Backpropagation involves computing the gradient of the loss function with respect to the weights and biases, and using this gradient to update the parameters of the network.
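The pre-training, embedding, backpropagation, and inference steps above can be seen end to end in a deliberately tiny sketch: a next-word predictor with one embedding matrix and one output matrix, trained by gradient descent on a two-sentence corpus. This is an illustrative assumption, not how production LLMs are built (there are no transformer layers, no attention, and no fine-tuning), but the next-word training objective and the backpropagation update follow the same idea.

```python
import numpy as np

corpus = "the cat sat on the mat . the dog sat on the rug .".split()
vocab = sorted(set(corpus))
idx = {w: i for i, w in enumerate(vocab)}
V, d = len(vocab), 16

# Pre-training data: (previous word, next word) pairs for next-word prediction.
prev = np.array([idx[w] for w in corpus[:-1]])
nxt = np.array([idx[w] for w in corpus[1:]])

rng = np.random.default_rng(0)
E = rng.normal(scale=0.5, size=(V, d))   # word embeddings: one vector per word
W = rng.normal(scale=0.5, size=(d, V))   # projects an embedding to a score per vocabulary word

def forward(E, W):
    h = E[prev]                                          # embedding lookup
    logits = h @ W
    logits -= logits.max(axis=1, keepdims=True)          # numerical stability
    p = np.exp(logits)
    p /= p.sum(axis=1, keepdims=True)                    # softmax over the vocabulary
    loss = -np.log(p[np.arange(len(nxt)), nxt]).mean()   # mean cross-entropy
    return h, p, loss

_, _, initial_loss = forward(E, W)
lr = 0.5
for step in range(2000):
    h, p, loss = forward(E, W)
    # Backpropagation: d(loss)/d(logits) = (p - one_hot(target)) / N
    dlogits = p.copy()
    dlogits[np.arange(len(nxt)), nxt] -= 1
    dlogits /= len(nxt)
    dW = h.T @ dlogits
    dh = dlogits @ W.T
    dE = np.zeros_like(E)
    np.add.at(dE, prev, dh)   # accumulate gradients for words that occur more than once
    E -= lr * dE              # gradient-descent updates of the parameters
    W -= lr * dW

def predict_next(word):
    """Inference: return the most probable next word after `word`."""
    scores = E[idx[word]] @ W
    return vocab[int(np.argmax(scores))]

print(f"loss {initial_loss:.2f} -> {loss:.2f}")
print(predict_next("sat"), predict_next("on"))
```

Because “on” is the only word that ever follows “sat” in the toy corpus, the trained model should rank it first; words such as “the” that are followed by several different words end up with probability spread across those options.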

LLMs are an effective tool for processing and analyzing text data. Because they learn linguistic patterns through deep learning, they can be applied to a variety of use cases in numerous fields.

LLM Examples

Many people are already familiar with a few popular LLM-driven applications, such as ChatGPT and DALL-E (a text-to-image model, which created the header image for this post from a “prompt” for an oil painting of a computer with a chat dialog on the screen). Countless other LLMs are available, some proprietary and others open-source. In addition, some LLMs are accessed as services through APIs, while others must be downloaded and integrated into your application.

These large language models have been used extensively in the healthcare, financial, and educational sectors. For instance, GPT-3 has been used to create chatbots that offer customer support and service in sectors like banking and retail. In healthcare, BERT-based models have been applied to tasks such as clinical decision support and the analysis of clinical text. In education, language models have been used to create intelligent tutoring systems that offer students individualized learning opportunities.

Overall, due to their highly developed natural language processing capabilities, large language models have demonstrated considerable promise for revolutionizing a variety of industries.

Large Language Model Use Cases

Large language models have numerous use cases across industries. Here are some of the top ones:

- Language Translation: Large language models have been used for language translation tasks, allowing for accurate and efficient translations across multiple languages.

- Chatbots: Large language models have been used to create chatbots capable of responding to customer queries and providing assistance.

- Content Generation: Large language models can generate high-quality content, such as news articles, product descriptions, and social media posts.

- Sentiment Analysis: Large language models can analyze the sentiment of text, allowing businesses to monitor brand sentiment and customer opinions.

- Speech Recognition: Large language models have been used to improve speech recognition accuracy and enable voice assistants such as Amazon Alexa and Google Home.

- Question-Answering Systems: Large language models can answer questions based on a given input, making them useful for tasks such as customer service and technical support.

- Text Summarization: Large language models can summarize long pieces of text into shorter, more digestible summaries.

- Language Modeling: Large language models can be used to generate text in a specific style or domain, such as scientific writing or legal documents.

- Content Curation: Large language models can curate content based on a user’s preferences and interests, providing personalized content recommendations.

- Fraud Detection: Large language models can be used to detect fraudulent activity by analyzing text data such as emails, chat logs, and transaction records.

- Personalization: Large language models can personalize user experiences by analyzing user data and providing personalized recommendations, such as product recommendations or content suggestions.

1. Language Translation

Language translation is one of the most significant and widely used applications of large language models (LLMs). LLMs are useful translation tools because they can analyze and comprehend enormous volumes of text in various languages.

Translation converts a text from one language to another while preserving its context and meaning. LLMs have made this process faster, more precise, and more efficient. A large language model such as Google’s T5, for example, can translate text between several languages while maintaining the context and original meaning.
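T5 treats every task, translation included, as text-to-text: the input is a string that begins with a task prefix. The helper below is a minimal sketch of how such an input is formatted, assuming the prefix wording used in the T5 paper; the restriction to English-to-German, French, and Romanian reflects the translation tasks in T5’s original training mixture. The resulting string would then be passed to a T5 model (for example through the Hugging Face Transformers library), which is not shown here.

```python
# Translation directions included in the original T5 training mixture.
T5_TRANSLATION_PAIRS = {("English", "German"), ("English", "French"), ("English", "Romanian")}

def t5_translation_input(text: str, source: str, target: str) -> str:
    """Format `text` as a T5-style text-to-text translation input."""
    if (source, target) not in T5_TRANSLATION_PAIRS:
        raise ValueError(f"{source}->{target} is not one of T5's original translation tasks")
    return f"translate {source} to {target}: {text}"

print(t5_translation_input("The house is wonderful.", "English", "German"))
# translate English to German: The house is wonderful.
```

The same prefix convention is what lets one T5 model handle translation, summarization, and other tasks without task-specific output layers.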

In order to create translation systems that can work with specialized subject matter, such as legalese, technical jargon, or medical terminology, LLMs are also employed. These systems can produce extremely accurate and contextually appropriate translations by training LLMs on specialized datasets.

In addition to conventional text-based translation, LLMs have been used in speech translation, where spoken words are translated in real time. This capability can be especially helpful for businesses, government bodies, and other organizations that need to communicate with people from other countries who speak other languages.

Real-world examples of large language models used for language translation:

- Amazon: Amazon uses a custom-built neural machine translation model to translate product listings and customer reviews across multiple languages.

- Microsoft: Microsoft Translator, powered by neural machine translation, is used for real-time language translation in their products such as Office, Skype, and Teams.

- Google: Google Translate is a widely used machine translation service that relies on neural machine translation technology for accurate translations.

- Airbnb: Airbnb uses machine translation to automatically translate host reviews and guest messages into multiple languages, helping to bridge language barriers for their global community.

- eBay: eBay uses machine translation to translate product descriptions and customer reviews across multiple languages, helping to facilitate cross-border trade.

- Uber: Uber uses machine translation to automatically translate driver and rider messages, helping to connect people across language barriers.

- Facebook: Facebook uses machine translation to translate posts, comments, and messages in their platform, making it accessible to a global audience.

- TripAdvisor: TripAdvisor uses machine translation to automatically translate traveler reviews into multiple languages, helping to support a global travel community.

- Booking.com: Booking.com uses machine translation to translate property descriptions and customer reviews across multiple languages, helping to facilitate international travel.

- Expedia: Expedia uses machine translation to translate hotel and travel descriptions across multiple languages, helping to make their platform accessible to a global audience.

- Rakuten: Rakuten uses machine translation to automatically translate product listings and reviews on their global e-commerce platform.

- Alibaba: Alibaba uses machine translation to translate product listings and customer reviews across multiple languages, helping to facilitate cross-border trade.

2. Chatbots

Chatbots are computer programs that use natural language processing (NLP) methods to mimic human conversation. LLMs are essential to modern chatbots because they enable them to comprehend natural language and produce natural-sounding responses. Trained on vast volumes of text data with deep learning algorithms, LLMs can respond to user queries in a way that resembles a human.

LLMs enable chatbots to assess user input and provide relevant responses. They can take the context of the conversation into account and produce responses that read as if they were written by a person. Logs of previous conversations can also be used to further train or fine-tune the underlying model, gradually improving the chatbot’s accuracy.

By making use of LLMs, chatbots can handle complicated language patterns and give more precise and relevant responses, offering users a seamless and customized experience. This can raise customer satisfaction and improve efficiency across a variety of businesses.
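Keeping track of the conversation is what lets a chatbot use context. A common pattern, sketched below under stated assumptions, is to resend the recent message history with every request. The `role`/`content` message format resembles what several chat APIs use, but `reply_fn` and `echo_model` are hypothetical stand-ins for a real LLM call.

```python
from dataclasses import dataclass, field
from typing import Callable

# A reply function receives the message history and returns the bot's next message.
# In a real system it would call an LLM; here it is a hypothetical stand-in.
ReplyFn = Callable[[list[dict[str, str]]], str]

@dataclass
class Chatbot:
    reply_fn: ReplyFn
    system_prompt: str
    max_turns: int = 10   # only the most recent turns are sent, to bound prompt length
    history: list[dict[str, str]] = field(default_factory=list)

    def ask(self, user_text: str) -> str:
        self.history.append({"role": "user", "content": user_text})
        # Send the system prompt plus recent messages so the model sees the context.
        recent = self.history[-2 * self.max_turns:]
        messages = [{"role": "system", "content": self.system_prompt}] + recent
        answer = self.reply_fn(messages)
        self.history.append({"role": "assistant", "content": answer})
        return answer

def echo_model(messages: list[dict[str, str]]) -> str:
    """Hypothetical stand-in for an LLM: reports how many user messages it can see."""
    user_turns = [m for m in messages if m["role"] == "user"]
    return f"I can see {len(user_turns)} message(s); latest: {user_turns[-1]['content']!r}"

bot = Chatbot(echo_model, "You are a helpful support assistant.")
print(bot.ask("Where is my order?"))
print(bot.ask("It was placed on Monday."))
```

Truncating to the last few turns is a simple design choice; production systems may instead summarize older turns so that long conversations still fit in the model’s context window.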

Real-world examples of LLM-based chatbots

- H&M: The clothing retailer’s chatbot on the Kik messaging app helps customers with outfit suggestions and lets them browse the latest collections.

- Domino’s Pizza: The pizza chain’s chatbot, named Dom, uses an LLM to process customer orders and answer any questions they may have about the menu.

- Mastercard: The financial services company uses an LLM-powered chatbot named Kai to help customers with their financial queries and even assist with fraud detection.

- Starbucks: The coffee chain’s chatbot, named My Starbucks Barista, uses an LLM to take customer orders and provide personalized recommendations based on their preferences.

- Pizza Hut: Pizza Hut’s chatbot, named Pizza Hut Assistant, uses an LLM to process customer orders and track their delivery status.

These are just a few examples of how companies are using LLM-based chatbots to enhance their customer service and provide more personalized experiences.

3. Content Generation

“Generative” in the context of a large language model refers to a model’s capacity to produce fresh, coherent writing that adheres to the patterns and structures discovered from a huge corpus of text. Due to their enormous size and capacity to comprehend intricate linguistic links, large language models (LLMs) excel at this task.

LLMs create new text using a method called autoregressive generation. A prompt or starting phrase is fed into the model, which assigns a probability to each possible next word and selects the next word based on those probabilities. The selected word is appended to the text, and the process repeats until the desired length is reached or the model produces an end-of-text token.
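The autoregressive loop described above can be sketched in a few lines of Python. The next-word probability table is hypothetical and conditions only on the previous word, whereas a real LLM computes these probabilities from the entire preceding text; the `temperature` parameter is a common sampling control added here for illustration.

```python
import random

# Hypothetical next-word probabilities standing in for an LLM's output distribution.
NEXT_WORD_PROBS = {
    "<start>": {"the": 0.7, "a": 0.3},
    "the": {"cat": 0.5, "dog": 0.5},
    "a": {"cat": 0.4, "dog": 0.6},
    "cat": {"sat": 0.8, "ran": 0.2},
    "dog": {"ran": 0.7, "sat": 0.3},
    "sat": {"<end>": 1.0},
    "ran": {"<end>": 1.0},
}

def generate(prompt, max_new_words=10, temperature=1.0, rng=random):
    """Repeatedly sample the next word and append it until <end> or the length limit."""
    words = list(prompt)
    for _ in range(max_new_words):
        probs = NEXT_WORD_PROBS[words[-1]]
        # Temperature reshapes the distribution: < 1 sharpens it, > 1 flattens it.
        weights = [p ** (1.0 / temperature) for p in probs.values()]
        next_word = rng.choices(list(probs), weights=weights, k=1)[0]
        if next_word == "<end>":
            break
        words.append(next_word)  # the new word becomes context for the next step
    return words

print(" ".join(generate(["<start>"], rng=random.Random(42))[1:]))
```

Raising each probability to the power 1/temperature is equivalent to dividing the model’s logits by the temperature before the softmax.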

Text completion, text summarization, dialogue creation, and creative writing support are all examples of generative applications of LLMs. LLMs, for instance, can be employed to finish sentences or paragraphs, produce summaries of lengthier texts, or produce natural-sounding dialogue in response to user input. LLMs can also be used to generate fresh text in response to a given prompt or theme, which can serve as recommendations or sources of inspiration for creative writing.

Overall, the generative capabilities of LLMs offer a wide range of creative and practical uses, and their ability to produce cohesive, natural-sounding text gives them potential applications across many fields and businesses.

Real-world use cases of LLMs in Content Generation

Google:

- PaLM: an LLM from Google Research that supports a wide range of natural language tasks

- ELECTRA (Efficiently Learning an Encoder that Classifies Token Replacements Accurately): Pre-training language model optimized for efficiency and accuracy.

- GShard: A framework for scaling very large models across many processors in parallel, used by Google to train a massively multilingual translation model.

- LaMDA: useful for generating text for dialogue with humans, as well as other tasks such as copywriting and translation

- BERT: used for natural language processing tasks such as question answering and language understanding. It is used by Google Search to understand and answer user queries.

- ALBERT (A Lite BERT): Smaller and more efficient version of BERT, used for language understanding tasks such as text classification.

- Pegasus: Language model optimized for text summarization.

- XLNet (by Carnegie Mellon University and Google): A generalized autoregressive pre-training model, built on Transformer-XL, used for language understanding tasks such as text classification and question answering.

- T5 (Text-to-Text Transfer Transformer): Large-scale language model trained for various text-based tasks such as summarization and question-answering.

OpenAI:

- GPT-2 (Generative Pre-trained Transformer 2): Language model used for text completion, content generation, and text classification.

- GPT-3 and ChatGPT: text generation useful especially for dialogue with humans, as well as copywriting, translation, and other tasks (comparable models from other organizations include Google’s LaMDA, Character.ai’s models, and Megatron-Turing NLG from Microsoft and NVIDIA)

- Codex: a code generation model that powers GitHub Copilot, which provides auto-complete suggestions as well as creation of entire code blocks

- DALL-E: generation of images based on text descriptions (Stable Diffusion, from Stability AI, is a comparable open model)

Amazon:

- Comprehend: a natural language processing (NLP) service that can extract insights from text data such as sentiment analysis, entity recognition, and topic modeling.

- Amazon Polly: a text-to-speech service that can convert written text into lifelike speech in multiple languages.

- Amazon Transcribe: a speech recognition service that can transcribe audio and video recordings into text.

- Amazon Translate: a machine translation service that can translate text from one language to another in real-time.

- Kendra: a search service that uses natural language processing to help users find the information they need within their organization.

- Lex: a service that can build conversational interfaces using voice and text, allowing developers to create chatbots and virtual assistants.

- Amazon SageMaker Autopilot: an automated machine learning service that can automatically build, train, and deploy machine learning models.

- Amazon Textract: a service that uses machine learning to extract text and data from documents such as forms and tables.

- Amazon Rekognition: a computer vision service that can analyze images and videos, including object and scene detection, facial recognition, and emotion detection.

- Amazon Personalize: a service that uses machine learning to personalize product and content recommendations for individual users.

- Amazon Forecast: a machine learning service that can generate accurate forecasts for time-series data.

- Amazon Comprehend Medical: a service that uses machine learning to extract information from medical records such as diagnosis, treatment, and medication information.

- Amazon Fraud Detector: a machine learning service that can detect and prevent online fraud in real-time.

- Amazon CodeGuru: a service that uses machine learning to automatically review code and provide recommendations for improvements.

- Amazon DeepComposer: a machine learning service that can generate music using generative models.

- Amazon Braket: a fully-managed service that allows developers to experiment with quantum computing.

- Amazon Augmented AI (A2I): a service that allows developers to easily build human review systems for machine learning predictions.

- Amazon Lookout for Vision: a service that uses computer vision to detect anomalies in manufacturing and retail processes.

- Amazon Connect: a cloud-based contact center service that can use natural language processing to automate customer interactions.

Microsoft:

- CodeGen (developed by Salesforce Research): code generation model that provides auto-complete suggestions as well as creation of entire code blocks

- UniLM: Pre-training language model optimized for a range of NLP tasks.

- Imagen Video (developed by Google Research): generation of videos based on text descriptions

- Marian: Language model optimized for machine translation.

- ProphetNet: Pre-training language model optimized for sequence-to-sequence tasks such as language translation.

Facebook:

- RoBERTa (Robustly Optimized BERT Pretraining Approach): A variation of BERT used for natural language understanding.

- BART (Bidirectional and Auto-Regressive Transformers): Pre-training language model optimized for language generation tasks.

- CamemBERT: Language model optimized for French language understanding.

- Blender: A chatbot developed using a large-scale language model.

BigScience (coordinated by Hugging Face):

- BLOOM: general-purpose language model used for generation and other text-based tasks, with a specific focus on multilingual support

Anthropic:

- Claude: an AI assistant used for dialogue, writing, summarization, and other LLM-powered tasks

Otter.ai:

- Otter: transcription of meetings and audio files into text (Whisper, a comparable open-source speech recognition model, comes from OpenAI)

Other examples include:

- GPT-Neo by EleutherAI: Open-source language model based on the GPT architecture.

- CTRL (Conditional Transformer Language Model) by Salesforce: Language model optimized for text generation tasks.

- T5 via Hugging Face: Google’s T5 model, available through the Hugging Face Transformers library and used for natural language processing tasks such as text summarization, classification, and translation

- Salesforce Einstein Language: an LLM used for customer service chatbots, as well as for analyzing customer sentiment and feedback

- Amazon Comprehend: an LLM used for text analysis, including sentiment analysis, key phrase extraction, and language detection.

- Megatron by NVIDIA: Large-scale language model designed for training on multiple GPUs.

4. Sentiment Analysis

Sentiment analysis locates and extracts subjective information from textual data, such as online reviews or social media posts, to determine the writer’s sentiment or opinion about a specific issue or product. Large language models (LLMs) can be used for sentiment analysis because, by training on vast amounts of text, they learn to classify text into categories such as positive, negative, or neutral.

To make sentiment analysis more precise, LLMs can draw on contextual information in the text, including slang, idioms, and complex grammar. LLMs can often recognize sarcasm and irony as well, which are crucial for determining the genuine sentiment of a text.

Sentiment analysis with LLMs can benefit numerous fields, including brand monitoring, customer service, market research, and political analysis.
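One way to use a general-purpose LLM for sentiment analysis, without training a dedicated classifier, is few-shot prompting: show the model a couple of labeled examples, then ask it to label new text. The sketch below assumes a hypothetical `complete` function that sends a prompt to an LLM and returns its text output; the fake model in the example exists only to make the code runnable.

```python
from typing import Callable

LABELS = ("positive", "negative", "neutral")

def build_sentiment_prompt(text: str) -> str:
    # Few-shot prompt: a couple of labeled examples, then the text to classify.
    return (
        "Classify the sentiment of each review as positive, negative, or neutral.\n"
        "Review: The battery lasts all day. Sentiment: positive\n"
        "Review: It broke after a week. Sentiment: negative\n"
        f"Review: {text} Sentiment:"
    )

def classify_sentiment(text: str, complete: Callable[[str], str]) -> str:
    """`complete` is a hypothetical prompt -> text function backed by an LLM."""
    raw = complete(build_sentiment_prompt(text)).strip().lower()
    # Map free-form model output onto the fixed label set; fall back to neutral.
    for label in LABELS:
        if raw.startswith(label):
            return label
    return "neutral"

def fake_llm(prompt: str) -> str:
    """Stand-in for a real LLM call, for demonstration only."""
    return " Negative." if "Oh great, another" in prompt else "positive"

print(classify_sentiment("Oh great, another delayed delivery.", fake_llm))  # negative
```

Normalizing the model’s reply onto a fixed label set matters in practice, because an LLM may answer with different capitalization, punctuation, or extra words.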

Examples of LLM-based sentiment analysis use cases

- Amazon Comprehend: An LLM used for text analysis, including sentiment analysis, key phrase extraction, and language detection.

- Salesforce Einstein Language: An LLM used for customer service chatbots, as well as for analyzing customer sentiment and feedback.

- Google Cloud Natural Language API: An LLM-based API that provides sentiment analysis, entity recognition, and syntax analysis capabilities.

- Hugging Face Transformers: An open-source library that provides pretrained transformer models for various natural language processing tasks, including sentiment analysis.

- IBM Watson Natural Language Understanding: An LLM-based tool that provides sentiment analysis, entity recognition, and concept extraction capabilities.

These businesses analyze enormous amounts of text data, including social media posts, client comments, and online reviews, to glean insights. This enables them to comprehend the attitudes and opinions of their customers regarding their goods and services and to base business decisions on this information.

5. Speech Recognition

The act of turning spoken language into text is called speech recognition, commonly referred to as automatic speech recognition (ASR). In order to increase the accuracy of transcription, large language models (LLMs) are employed in speech recognition systems. These LLMs help the system comprehend the context and meaning of the spoken words.

Because they are trained extensively on text data, including transcriptions of spoken language, LLMs learn the relationships and patterns between words and sentences. With this knowledge of language, the LLM can more accurately infer which words a speaker is likely using from the surrounding context, which improves speech recognition accuracy.

For instance, when transcribing the spoken sentence “I saw a bear in the park,” an LLM-based speech recognition system can use the context to correctly output “bear” rather than its homophone “bare.”
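One common way to combine a language model with a speech recognizer is n-best rescoring: the acoustic model proposes several candidate transcriptions, and the language model’s score separates candidates that sound alike. The sketch below uses a tiny hypothetical bigram table in place of an LLM, and the acoustic scores and `lm_weight` value are made-up illustrations.

```python
import math

# Hypothetical bigram language-model probabilities (a real system would query an LLM).
BIGRAM = {
    ("a", "bear"): 0.020, ("a", "bare"): 0.001,
    ("bear", "in"): 0.050, ("bare", "in"): 0.002,
}
FLOOR = 1e-4  # probability assumed for word pairs not in the table

def lm_log_prob(sentence: str) -> float:
    """Sum of log-probabilities of consecutive word pairs."""
    words = sentence.lower().split()
    return sum(math.log(BIGRAM.get(pair, FLOOR)) for pair in zip(words, words[1:]))

def rescore(hypotheses, lm_weight=0.5):
    """Pick the text maximizing acoustic log-score + lm_weight * LM log-probability."""
    return max(hypotheses, key=lambda h: h[1] + lm_weight * lm_log_prob(h[0]))[0]

# "bear" and "bare" sound the same, so the acoustic scores are nearly equal.
n_best = [("I saw a bare in the park", -4.1), ("I saw a bear in the park", -4.2)]
print(rescore(n_best))  # I saw a bear in the park
```

With `lm_weight=0`, the acoustic scores alone would pick “bare”; the language model’s strong preference for “a bear in” is what flips the decision.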

Voice assistants like Alexa, Google Assistant, and Cortana are powered by LLM-based speech recognition technology from companies like Amazon, Google, and Microsoft. These systems use LLMs to improve speech recognition accuracy and give users a more natural and intuitive experience. LLMs are also used for speech-to-text transcription in fields such as legal and medical transcription.

Real-world examples of LLMs in speech recognition

Several companies are using LLMs for speech recognition. Here are a few examples:

- Google: Google has developed an LLM-based speech recognition system that is used in its voice assistant, Google Assistant. It is also used in other Google products such as Google Translate and Google Meet.

- Microsoft: Microsoft uses LLMs in its speech recognition system for its virtual assistant, Cortana, as well as in its speech-to-text service, Azure Speech Services.

- Amazon: Amazon’s voice assistant, Alexa, uses LLMs for speech recognition. It is also used in Amazon Transcribe, which is a service that converts speech to text.

- Apple: Apple uses LLMs in its speech recognition system for Siri, its virtual assistant. It is also used in other Apple products such as dictation in Pages and Keynote.

- Baidu: Baidu, a Chinese search engine, uses LLMs for speech recognition in its virtual assistant, Duer.

LLMs have significantly improved the accuracy of speech recognition systems, making them more efficient and user-friendly.

6. LLM-Powered Question Answering

Q&A, or question and answer, is a common form of communication in which one person asks a question and another responds. In the context of a large language model (LLM), Q&A refers to the interaction in which a user asks a question and the LLM provides an answer based on the query and the data it has been trained on.

In a typical Q&A session with an LLM, the user enters a question in natural language, and the LLM processes it to determine the query’s intent and produce a relevant response. The LLM draws on the knowledge it gained from training on a huge corpus of material, including books, papers, and other information sources, to produce answers that are relevant and informative.

An LLM’s capacity to comprehend and analyze sophisticated and nuanced questions written in plain language is one of its main advantages when used in Q&A scenarios. Since the user can ask questions just like they would if they were speaking to another person, the engagement is more natural and intuitive.

Generally speaking, Q&A with an LLM is a powerful tool for obtaining knowledge and information that can be applied in a variety of settings, from customer assistance to educational applications.

LLM-powered Q&A systems use cases

- Google: Google uses an LLM-powered Q&A system in its search engine to provide users with answers to their queries. When a user enters a question in the search bar, Google uses its LLM to understand the intent of the question and generate a relevant response.

- Amazon: Amazon’s Alexa virtual assistant is powered by an LLM and can answer a wide range of questions, from weather forecasts to cooking instructions. Users can ask Alexa a question in natural language and receive a spoken response.

- Microsoft: Microsoft’s Cortana virtual assistant also uses an LLM to understand natural language queries and generate responses. Cortana is available on Windows 10 devices and can answer questions, provide reminders, and perform other tasks.

- IBM Watson: IBM Watson is a platform that uses LLM technology to power a range of applications, including Q&A systems. One example is Watson Discovery, which can help businesses answer customer queries more efficiently by analyzing large volumes of data and generating relevant responses.

- OpenAI: OpenAI, the company that developed the GPT-3 LLM, has created a platform that allows developers to build and deploy their own Q&A systems using the technology. This platform can be used for a wide range of applications, from customer support to educational tools.

7. Summarization

Summarization is another crucial application of large language models (LLMs). It is the process of creating a succinct, clear summary of a lengthy text while keeping its important details. This task is especially helpful when a large volume of text, such as news articles, research papers, or legal documents, needs to be processed quickly and efficiently.

LLMs can be trained to perform summarization in different ways. In extractive summarization, key phrases or sentences are taken from the text and combined to create a summary. In abstractive summarization, the model identifies the key concepts and generates new sentences that condense the same information.
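To illustrate the extractive approach, here is a minimal sketch of a classic frequency-based method: each sentence is scored by how common its non-stopword words are across the whole text, and the top-scoring sentences are kept. This is a simple non-neural baseline chosen for clarity, not an LLM; abstractive summarization would instead require a trained generative model. The small stopword list is an assumption.

```python
import re
from collections import Counter

STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "are",
             "for", "on", "it", "that", "with", "as"}

def content_words(text: str) -> list[str]:
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]

def extractive_summary(text: str, num_sentences: int = 2) -> str:
    """Return the `num_sentences` highest-scoring sentences, in original order."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    freq = Counter(content_words(text))

    def score(sentence: str) -> float:
        tokens = content_words(sentence)
        # Average frequency, so long sentences are not favored just for being long.
        return sum(freq[t] for t in tokens) / (len(tokens) or 1)

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    # Restore original order so the summary reads naturally.
    return " ".join(sentences[i] for i in sorted(ranked[:num_sentences]))

article = ("The central bank raised interest rates today. Analysts expected the rate increase. "
           "Coffee prices were unchanged. Higher rates may slow the bank's lending.")
print(extractive_summary(article))
```

Here the word “rates” appears twice, so the two sentences that contain it score highest and form the summary.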

Summarization has numerous uses in fields like journalism, publishing, finance, and law. For instance, news organizations can use LLMs to quickly create summaries of breaking news stories and distribute them to readers via social media or email. In the financial industry, LLMs can automatically summarize research reports, giving investors quick access to information on market trends and investment prospects. LLMs can also automatically summarize legal documents, saving legal practitioners the time and effort of reading through large volumes of material.

Examples of large language models (LLMs) being used for summarization

- Bloomberg: Bloomberg has used LLMs for summarizing financial news articles, allowing users to quickly grasp key information and make better-informed investment decisions. They have used the GPT-3 model for this purpose.

- Google: Google has used their T5 model to create a new feature in their search engine that provides a summary of a webpage’s content directly in the search results. This allows users to quickly determine if a page is relevant to their search query without having to click through to the site.

- IBM: IBM has used LLMs for summarizing technical documents in the field of computer science. They have used a model called SciBERT, which is a variant of the BERT model that has been specifically trained on scientific text.

- LinkedIn: LinkedIn has used automated summarization for news articles related to the job market, allowing users to stay up-to-date on the latest trends and insights. They have used TextRank, a graph-based extractive summarization algorithm (not itself a large language model).

- The New York Times: The New York Times has used LLMs for summarizing news articles, providing readers with a brief summary of the article’s main points before diving into the full text. They have used a model called BART, a sequence-to-sequence model from Facebook AI that pairs a BERT-style bidirectional encoder with a GPT-style autoregressive decoder and performs well on generation tasks such as summarization.

- Reuters: Reuters has used LLMs for summarizing financial news articles, allowing traders and investors to quickly digest the most important information. They have used a model called XLNet, a generalized autoregressive pre-training model that has been applied to tasks such as question answering and text classification.

- Salesforce: Salesforce has used LLMs for summarizing customer feedback, allowing them to quickly identify patterns and insights that can help improve their products and services. They have used a model called T5 for this purpose.

- Tencent: Tencent has used LLMs for summarizing news articles in Chinese, providing readers with a concise overview of the article’s main points. They have used a model called BERT-wwm, a variant of the BERT model pre-trained with whole word masking on a large Chinese corpus.

- Twitter: Twitter has used LLMs for summarizing news articles and tweets related to current events, allowing users to quickly catch up on the latest news and developments. They have used a model called BERT for this purpose.

- Uber: Uber has used LLMs for summarizing customer feedback and reviews, allowing them to quickly identify areas where they can improve their service. They have used a model called DistilBERT, which is a smaller and faster version of the BERT model.

8. Language Modeling

Language modeling is the task of estimating how likely a given sequence of words is in natural language. In the context of a large language model (LLM), language modeling means training a neural network to predict the next word in a sequence from the words that precede it.
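The next-word objective can be sketched with a count-based bigram model, a far simpler stand-in for a neural LLM. It estimates P(next word | previous word) from counts and scores a whole sentence with the chain rule, log P(w1..wn) = Σ log P(wi | wi-1). The class name `BigramLM`, the `<s>`/`</s>` boundary tokens, and add-one smoothing are illustrative choices, not details of any particular LLM.

```python
import math
from collections import Counter, defaultdict
from typing import Optional


class BigramLM:
    """Hypothetical count-based bigram language model with add-one (Laplace) smoothing."""

    def __init__(self, corpus: list[str]):
        # bigrams[prev][nxt] = number of times `nxt` followed `prev` in training.
        self.bigrams: dict[str, Counter] = defaultdict(Counter)
        # Possible next tokens: every training word plus the end-of-sentence marker.
        self.vocab: set[str] = {"</s>"}
        for sentence in corpus:
            tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
            self.vocab.update(tokens[1:])
            for prev, nxt in zip(tokens, tokens[1:]):
                self.bigrams[prev][nxt] += 1

    def prob(self, prev: str, nxt: str) -> float:
        """Smoothed estimate of P(nxt | prev)."""
        counts = self.bigrams.get(prev.lower(), Counter())
        return (counts[nxt.lower()] + 1) / (sum(counts.values()) + len(self.vocab))

    def log_prob(self, sentence: str) -> float:
        """Chain rule: log P(sentence) = sum of log P(w_i | w_{i-1})."""
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        return sum(math.log(self.prob(p, n)) for p, n in zip(tokens, tokens[1:]))

    def predict_next(self, prev: str) -> Optional[str]:
        """Most frequent continuation seen in training, or None if `prev` was never seen."""
        counts = self.bigrams.get(prev.lower())
        return counts.most_common(1)[0][0] if counts else None


if __name__ == "__main__":
    lm = BigramLM(["the cat sat on the mat", "the dog sat on the rug", "the cat ate"])
    print(lm.predict_next("the"))  # prints: cat
    # A word order seen in training scores higher than an unseen one.
    print(lm.log_prob("the cat sat") > lm.log_prob("the mat sat"))  # prints: True
```

An LLM replaces the count table with a neural network that conditions on the whole preceding context rather than a single word, but the quantity being estimated is the same.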

During training, the LLM is exposed to a vast corpus of text data, including books, articles, and other information sources. From this data, the LLM learns linguistic patterns such as how words are used and how they relate to one another.

Once trained, the LLM can be applied to a variety of natural language processing tasks, including question answering, text summarization, and machine translation. For instance, when answering a user’s question, the LLM generates a response using its knowledge of the language and the context of the question.

One of an LLM’s primary advantages in language modeling is its capacity to learn from vast amounts of data and produce natural, fluent responses. Powerful neural network architectures and training methods allow the LLM to recognize intricate linguistic patterns and produce responses that are contextually relevant and informative.

Language modeling is a crucial part of LLM technology and is necessary to enable applications for natural language processing. An LLM can be used to produce responses that are helpful and instructive in a variety of circumstances by comprehending the language and estimating the probability of certain word sequences.

Examples of businesses using LLM-backed language modeling

- Grammarly: Grammarly, an online writing assistant, uses an LLM to analyze and improve written material. The LLM predicts the likelihood of different word combinations and offers suggestions for improving grammar, spelling, and punctuation.

- Google Translate: Google Translate uses an LLM to translate text from one language to another. Because it was trained on large amounts of parallel text data, the LLM can predict the most likely translation for a given input.

- Siri: Apple’s Siri virtual assistant uses an LLM to understand and answer questions posed in natural language. Because it has been trained on a huge corpus of data, the LLM can produce responses that are relevant and informative.

- Facebook: Facebook uses an LLM to generate auto-complete suggestions for text input fields. Because it has been trained on a huge corpus of data, the LLM can predict the most likely next word given the input context.

- OpenAI: OpenAI has created GPT-3, an LLM that can generate natural language responses to a wide variety of prompts. The technology can power numerous applications, including chatbots, language translation, and content creation.

- Amazon: Amazon’s virtual assistant Alexa, which can understand and respond to natural language queries, uses an LLM. Because it has been trained on a huge corpus of data, the LLM can produce responses that are relevant and helpful to users.

Overall, LLM-powered language modeling is a crucial part of many contemporary technologies, and a wide range of businesses use it to improve the accuracy and effectiveness of their natural language processing systems.

9. Language Curation

Content curation is the process of selecting, organizing, and presenting content in a way that is useful and relevant to a particular audience. In the context of LLMs, content curation means using NLP techniques to analyze large amounts of text data and select the material that is most relevant and useful to a given audience.

There are many ways to curate content using LLMs. For instance, an LLM-powered content curation system can examine news stories or social media posts to find trends or topics of interest to a particular audience, then use this information to suggest posts or articles that match the audience’s interests.
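The trend-spotting step can be sketched without an LLM by comparing how often each term appears in recent posts against a baseline period, then suggesting recent posts that mention the fastest-rising terms. In a real system an LLM or embedding model would likely replace the plain word matching; the function names `trending_terms` and `suggest_posts`, the add-one smoothing, and the `min_count` threshold are all hypothetical.

```python
import re
from collections import Counter

# Illustrative stopword list (an assumption, not from any specific product).
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "on", "for", "is", "as"}


def terms(text: str) -> set[str]:
    """Distinct lowercase content words in a post."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def trending_terms(recent: list[str], baseline: list[str],
                   top_k: int = 3, min_count: int = 2) -> list[str]:
    """Hypothetical helper: terms whose share of recent posts most exceeds their baseline share."""
    recent_df = Counter(t for post in recent for t in terms(post))
    base_df = Counter(t for post in baseline for t in terms(post))

    def lift(term: str) -> float:
        recent_share = recent_df[term] / len(recent)
        # Add-one smoothing so terms absent from the baseline do not divide by zero.
        base_share = (base_df[term] + 1) / (len(baseline) + 1)
        return recent_share / base_share

    candidates = [t for t, c in recent_df.items() if c >= min_count]
    # Highest lift first; ties broken alphabetically for a deterministic order.
    return sorted(candidates, key=lambda t: (-lift(t), t))[:top_k]


def suggest_posts(recent: list[str], trending: list[str]) -> list[str]:
    """Hypothetical helper: recent posts mentioning trending terms, most matches first."""
    wanted = set(trending)
    scored = [(len(terms(p) & wanted), p) for p in recent]
    return [p for n, p in sorted(scored, key=lambda x: -x[0]) if n > 0]


if __name__ == "__main__":
    baseline = ["market update on stocks", "weather in the city", "stocks and bonds report"]
    recent = ["chip shortage hits carmakers", "chip prices rise again",
              "stocks steady as chip makers rally", "weather stays mild", "stocks slip"]
    trend = trending_terms(recent, baseline)
    print(trend)  # prints: ['chip', 'stocks']
    print(suggest_posts(recent, trend))
```

"stocks" appears in recent posts but ranks below "chip" because it was already common in the baseline, which is the distinction between a trend and a merely frequent topic.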

LLMs can be used to curate content for particular fields or sectors. Using medical literature as an example, an LLM may be trained to recognize the articles or research studies that are most pertinent to healthcare workers. In accordance with their areas of interest or specialization, the system might then suggest these articles to physicians or nurses.

E-learning is another area where LLM-powered content curation is useful. LLMs can analyze large amounts of educational content to pinpoint the resources most relevant to a given subject or learning goal, helping educators and learners find the most suitable tools and materials for a particular subject or course.

In general, LLM-powered content curation is an effective method for locating and presenting information in a way that is pertinent to a certain audience. LLMs can assist in automating and improving the content curation process by analyzing vast volumes of data using natural language processing techniques.

Examples of companies using LLM-powered language curation

- Feedly: Feedly is an online news aggregator that uses LLMs to curate content for its users. The LLMs analyze the content of news articles and social media posts to identify trends and topics that are most relevant to a user’s interests. Feedly then presents this content in a personalized feed for each user.

- Pocket: Pocket is a content curation app that uses LLMs to analyze articles and identify key topics and themes. The app can then recommend related articles to users based on their interests and reading history.

- LumiNUS: LumiNUS is a learning management system used by the National University of Singapore that uses LLMs to curate educational content. The system analyzes course materials and recommends additional resources and materials to students based on their learning objectives and interests.

- Pinterest: Pinterest uses LLMs to curate content and recommend related pins to its users. The LLMs analyze the content of images and identify key topics and themes, allowing the platform to recommend related pins and boards to users.

- TikTok: TikTok uses LLMs to curate content and recommend videos to its users. The LLMs analyze the content of videos and identify key themes and topics, allowing the platform to recommend videos that are relevant and interesting to each user.

LLM-powered language curation is a helpful method for automating and streamlining the content selection process. LLMs can propose relevant material to users based on their interests and preferences by evaluating the text and other media’s content to discover significant themes and subjects.

10. Fraud Detection

Fraud detection aims to find fraudulent actions or behaviors in a system or dataset. In the context of LLMs, fraud detection means using NLP techniques to examine textual patterns and spot instances of fraud or other suspicious behavior.

LLMs can be applied to fraud detection in several ways. For instance, an LLM-powered fraud detection system may examine the text of customer support interactions to find instances of fraud or dishonesty. The LLM might be trained to spot linguistic patterns, such as evasive or deceptive answers to questions, that are frequently associated with dishonest behavior.

Financial services is another industry that uses LLM-powered fraud detection. LLMs can examine text data from financial transactions and spot patterns that could signal fraud or money laundering. An LLM, for instance, may be trained to spot linguistic patterns that frequently appear in fake invoices or falsified financial statements.

LLMs can also be applied to fraud detection in cybersecurity. An LLM-powered system could examine network logs or security alerts to find unusual patterns of behavior, and the LLM may be trained to spot linguistic patterns frequently used in phishing emails and other cyberattacks.
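As an illustration of learning the linguistic patterns of phishing text, the sketch below trains a multinomial naive Bayes classifier on a handful of labeled messages. Naive Bayes is a classical technique swapped in here for simplicity, not an LLM; the class name `NaiveBayes`, the `phishing`/`legit` labels, and the toy training messages are all hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


class NaiveBayes:
    """Hypothetical multinomial naive Bayes text classifier with add-one smoothing."""

    def __init__(self, examples: list[tuple[str, str]]):
        self.word_counts: dict[str, Counter] = {}
        self.doc_counts: Counter = Counter()
        for text, label in examples:
            self.doc_counts[label] += 1
            self.word_counts.setdefault(label, Counter()).update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}

    def log_posterior(self, text: str, label: str) -> float:
        """Unnormalized log P(label | text) = log P(label) + sum of log P(word | label)."""
        counts = self.word_counts[label]
        total = sum(counts.values())
        score = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        for word in tokenize(text):
            if word in self.vocab:  # words never seen in training are ignored
                score += math.log((counts[word] + 1) / (total + len(self.vocab)))
        return score

    def predict(self, text: str) -> str:
        return max(self.doc_counts, key=lambda label: self.log_posterior(text, label))


if __name__ == "__main__":
    # Toy, hand-written training messages; hypothetical, not real data.
    training = [
        ("urgent verify your account password now", "phishing"),
        ("your account is suspended click link to verify", "phishing"),
        ("confirm your password to avoid suspension", "phishing"),
        ("meeting notes attached for tomorrow", "legit"),
        ("lunch plans for friday", "legit"),
        ("quarterly report attached please review", "legit"),
    ]
    clf = NaiveBayes(training)
    print(clf.predict("please verify your password urgently"))  # prints: phishing
    print(clf.predict("notes attached for the meeting"))  # prints: legit
```

An LLM-based detector would capture context and phrasing far beyond individual word counts, but the workflow of training on labeled examples and scoring new messages is similar.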

LLM-driven fraud detection is a powerful tool for spotting and stopping fraudulent behavior. By analyzing textual patterns and spotting instances of questionable behavior, LLMs can help firms discover and stop fraud in a range of fields, including finance, cybersecurity, and customer service.

Real-world examples of companies using LLM-powered fraud detection

- PayPal: PayPal uses LLMs to analyze transaction data and identify patterns that may indicate fraudulent activity. The LLMs can detect anomalies in customer behavior, such as unusual purchasing patterns or attempts to use stolen credit cards.

- Socure: Socure is a digital identity verification company that uses LLMs to analyze customer data and detect fraud. The LLMs analyze a wide range of data sources, including social media profiles and public records, to build a comprehensive profile of each customer and identify potential fraud.

- Square: Square is a payment processing company that uses LLMs to analyze transaction data and detect fraudulent activity. The LLMs can detect patterns of behavior that are indicative of fraud, such as repeated attempts to make purchases with different credit cards.

- Mastercard: Mastercard uses LLMs to analyze transaction data and detect fraudulent activity in real time. The LLMs can detect patterns of behavior that are indicative of fraud, such as unusual purchasing patterns or attempts to use stolen credit cards.

- Pindrop: Pindrop is a voice authentication and fraud detection company that uses LLMs to analyze the language patterns of phone calls and detect fraudulent activity. The LLMs can detect patterns of behavior that are indicative of fraud, such as attempts to impersonate a customer or use stolen credentials.

In short, LLM-driven fraud detection helps firms identify and stop fraud in fields such as finance, identity verification, and customer service by studying linguistic patterns and flagging questionable behavior.

11. Personalization

Personalization means tailoring content or recommendations to a person’s unique preferences and interests. In the context of LLMs, personalization involves using NLP techniques to examine linguistic patterns and pinpoint the subjects and themes that are most relevant to a specific user.

There are numerous ways to personalize using LLMs. For instance, an LLM-powered personalization system might examine the language of articles or product descriptions to pinpoint the major themes and subjects most relevant to a specific user. The LLM may be trained to identify linguistic patterns that are frequently linked to a user’s preferences, such as particular themes or product categories.
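The profile-matching idea can be sketched with bag-of-words vectors: build a user profile from the descriptions of items the user has already engaged with, then rank unseen items by cosine similarity to that profile. A production LLM-powered system would more likely compare learned embeddings than raw word counts; the function names, the item IDs, and the toy catalog below are hypothetical.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts for an item description."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def recommend(history: list[str], catalog: dict[str, str], top_k: int = 2) -> list[str]:
    """Hypothetical helper: rank items not yet seen by similarity to the user's history."""
    profile: Counter = Counter()
    for item_id in history:
        profile.update(vectorize(catalog[item_id]))
    candidates = [i for i in catalog if i not in history]
    ranked = sorted(candidates, key=lambda i: cosine(profile, vectorize(catalog[i])), reverse=True)
    return ranked[:top_k]


if __name__ == "__main__":
    # Toy catalog of item descriptions; hypothetical data.
    catalog = {
        "m1": "space adventure sci fi thriller",
        "m2": "romantic comedy in paris",
        "m3": "sci fi space station mystery",
        "m4": "cooking competition reality show",
        "m5": "alien invasion sci fi action",
    }
    print(recommend(["m1"], catalog))  # prints: ['m3', 'm5']
```

Items the user has already seen are excluded before ranking, so the profile only drives suggestions for new content.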

Customer service is another industry where LLM-powered personalization is used. LLMs can evaluate the text of customer interactions to determine the linguistic patterns most relevant to a particular client. If the LLM is trained to recognize linguistic patterns frequently linked to a specific customer’s needs or preferences, customer service employees can offer more individualized and efficient support.

LLMs are also useful for personalization in marketing. An LLM-powered system may examine consumer information and pinpoint the linguistic patterns most likely to appeal to a specific customer demographic. The LLM may be trained to identify linguistic patterns frequently linked to a particular demographic or psychographic profile, enabling marketers to develop more effective, targeted campaigns.

LLM-powered personalization is an effective tool for tailoring information and recommendations to each user’s unique tastes and interests. By studying linguistic patterns and selecting the subjects and themes most relevant to each user, LLMs can help enterprises provide more individualized and efficient experiences across many sectors, including content curation, customer support, and marketing.

Examples of companies using LLM-powered personalization

- Netflix: Netflix uses LLMs to personalize content recommendations for its users. The LLM analyzes a user’s viewing history and searches to identify patterns of language and topics that are most relevant to that user. Based on this analysis, Netflix recommends new movies and TV shows that are likely to be of interest to the user.

- Amazon: Amazon uses LLMs to personalize product recommendations for its customers. The LLM analyzes a customer’s browsing and purchase history to identify patterns of language and product categories that are most relevant to that customer. Based on this analysis, Amazon recommends new products that are likely to be of interest to the customer.

- Spotify: Spotify uses LLMs to personalize music recommendations for its users. The LLM analyzes a user’s listening history and searches to identify patterns of language and music genres that are most relevant to that user. Based on this analysis, Spotify recommends new songs and playlists that are likely to be of interest to the user.

- Google: Google uses LLMs to personalize search results for its users. The LLM analyzes a user’s search history and preferences to identify patterns of language and topics that are most relevant to that user. Based on this analysis, Google provides search results that are tailored to the user’s specific interests and needs.

- Stitch Fix: Stitch Fix is a personal styling service that uses LLMs to personalize clothing recommendations for its customers. The LLM analyzes a customer’s style preferences and feedback on previous clothing selections to identify patterns of language and clothing styles that are most relevant to that customer. Based on this analysis, Stitch Fix recommends new clothing items that are likely to be of interest to the customer.

In practice, LLM-powered personalization tailors information and recommendations to each user’s tastes and interests. By studying linguistic patterns and identifying the subjects and themes most relevant to each user, LLMs can help enterprises provide more individualized and effective experiences in areas such as entertainment, e-commerce, and fashion.
