NVIDIA CUDA Toolkit For Windows

The NVIDIA CUDA Toolkit is a programming environment for building high-performance GPU-accelerated applications. You can use the CUDA Toolkit to develop, optimize, and deploy applications on GPU-accelerated embedded systems, desktop workstations, enterprise data centers, cloud-based platforms, and HPC supercomputers. The toolkit includes GPU-accelerated libraries, debugging and optimization tools, a C/C++ compiler, and a runtime library.

GPU-accelerated CUDA libraries allow for drop-in optimization in a variety of domains, including linear algebra, image and video processing, deep learning, and graph analytics.

Your CUDA applications can be deployed across all NVIDIA GPU families, both on-premises and in the cloud. Using built-in capabilities for distributing computations across multi-GPU configurations, scientists and researchers can create applications that scale from single-GPU workstations to cloud deployments with thousands of GPUs.

The toolkit includes an IDE with graphical and command-line tools for debugging, identifying GPU and CPU performance bottlenecks, and providing context-sensitive optimization guidance. You can write programs in a language you already know, such as C, C++, Fortran, or Python.

To get started, browse the online getting-started resources, optimization tips, and descriptive examples, and work with the growing developer community.

First, this large package gives you a set of tools for implementing parallel algorithms in C-like programming languages and for increasing the computing power and overall performance of your systems by managing CPU and GPU work more efficiently.

Second, the toolkit’s libraries are useful for building applications for a range of purposes: advanced calculations (such as linear algebra and other mathematical operations), signal processing, image processing, and motion tracking.

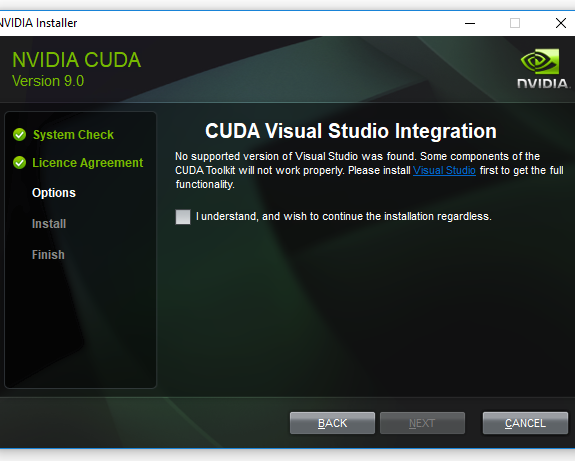

Before trying the tools themselves, note that the NVIDIA CUDA Toolkit includes documentation and an extensive set of samples and compilable resources. The installation also provides native integration with Visual Studio and Eclipse through dedicated plugins and NVIDIA Nsight.

NVIDIA CUDA Toolkit Features

- GPU Timestamp: Start timestamp

- Method: GPU method name. This is either “memcpy*” for memory copies or the name of a GPU kernel. Memory copies have a suffix that describes the type of a memory transfer, e.g. “memcpyDToHasync” means an asynchronous transfer from Device memory to Host memory

- GPU Time: Execution time of the method on the GPU

- CPU Time: The sum of GPU time and the CPU overhead to launch the method. At the driver-generated data level, CPU Time is only the CPU overhead to launch the method for non-blocking methods; for blocking methods it is the sum of GPU time and CPU overhead. All kernel launches are non-blocking by default, but they become blocking if any profiler counters are enabled. Asynchronous memory copy requests in different streams are non-blocking

- Stream Id: Identification number for the stream

- Columns only for kernel methods

- Occupancy: Occupancy is the ratio of the number of active warps per multiprocessor to the maximum number of active warps

- Profiler counters: Refer to the profiler counters section for a list of supported counters

- grid size: Number of blocks in the grid along the X, Y, and Z dimensions, shown as [num_blocks_X num_blocks_Y num_blocks_Z] in a single column

- block size: Number of threads in a block along the X, Y, and Z dimensions, shown as [num_threads_X num_threads_Y num_threads_Z] in a single column

- dyn smem per block: Dynamic shared memory size per block in bytes

- sta smem per block: Static shared memory size per block in bytes

- reg per thread: Number of registers per thread

- Columns only for memory methods

- mem transfer size: Memory transfer size in bytes

- host mem transfer type: Specifies whether a memory transfer uses “Pageable” or “Page-locked” memory
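The relationships between several of these columns can be sketched in a few lines of Python. This is an illustrative sketch only, not part of the toolkit: the function names and sample values are hypothetical, and the method-name pattern covers only the `memcpyDToHasync` form quoted above. It splits a memory-copy method name into its transfer type, computes occupancy as the ratio defined above, derives launch overhead from CPU Time and GPU Time, and parses a bracketed grid or block size column.

```python
import re

# Hypothetical helpers for illustration; the profiler does not provide these.

def parse_memcpy(method):
    """Split a 'memcpy*' method name into (source, destination, is_async).

    Returns None for anything else (for example, a kernel name). Only the
    'D' (Device) and 'H' (Host) forms seen in 'memcpyDToHasync' are handled.
    """
    match = re.fullmatch(r"memcpy([DH])To([DH])(async)?", method)
    if match is None:
        return None
    names = {"D": "Device", "H": "Host"}
    return names[match.group(1)], names[match.group(2)], match.group(3) is not None


def occupancy(active_warps, max_active_warps):
    """Ratio of active warps per multiprocessor to the maximum active warps."""
    return active_warps / max_active_warps


def launch_overhead(cpu_time, gpu_time, blocking):
    """CPU overhead to launch a method.

    For blocking methods, CPU Time is GPU time plus overhead, so subtract.
    For non-blocking methods, CPU Time is already the overhead alone.
    """
    return cpu_time - gpu_time if blocking else cpu_time


def parse_dims(column):
    """Parse a '[x y z]' grid size or block size column into a tuple of ints."""
    return tuple(int(value) for value in column.strip("[]").split())


print(parse_memcpy("memcpyDToHasync"))  # ('Device', 'Host', True)
print(occupancy(24, 48))                # 0.5
```

The sample numbers (24 active warps out of 48) are made up; real limits depend on the GPU.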

Frequently Asked Questions

How Do I Install The Toolkit In A Different Location?

During an interactive install, the Runfile installer asks where you wish to install the Toolkit and the Samples. For a non-interactive install, you can use the --toolkitpath and --samplespath parameters to change the install location:

$ ./runfile.run --silent \
    --toolkit --toolkitpath=/my/new/toolkit \
    --samples --samplespath=/my/new/samples
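When the install is scripted, the same arguments can be assembled programmatically. The following is a minimal Python sketch under stated assumptions: `runfile_args` is a hypothetical helper, not something the installer ships, and it uses only the flags shown in the command above.

```python
def runfile_args(runfile, toolkit_path=None, samples_path=None):
    """Build the argument list for a non-interactive runfile install.

    Hypothetical helper: it only emits the flags shown in the command above.
    A component is included only when a path is given for it.
    """
    args = [runfile, "--silent"]
    if toolkit_path is not None:
        args += ["--toolkit", f"--toolkitpath={toolkit_path}"]
    if samples_path is not None:
        args += ["--samples", f"--samplespath={samples_path}"]
    return args


args = runfile_args("./runfile.run", "/my/new/toolkit", "/my/new/samples")
print(" ".join(args))
# To actually run the installer, pass the list to subprocess.run(args, check=True).
```

Building a list instead of a single string avoids shell quoting problems if a path contains spaces.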

The RPM and Deb packages cannot be installed to a custom install location directly using the package managers. See the “Install CUDA to a specific directory using the Package Manager installation method” scenario in the Advanced Setup section for more information.

How Can I Tell X To Ignore A GPU For Compute-only Use?

To make sure X doesn’t use a certain GPU for display, you need to specify which other GPU to use for display. For more information, please refer to the “Use a specific GPU for rendering the display” scenario in the Advanced Setup section.

What Do I Do If The Display Does Not Load, Or CUDA Does Not Work, After Performing A System Update?

System updates may include an updated Linux kernel. In many cases, a new Linux kernel will be installed without properly updating the required Linux kernel headers and development packages. To ensure the CUDA driver continues to work when performing a system update, rerun the commands in the Kernel Headers and Development Packages section.

Additionally, on Fedora, the Akmods framework will sometimes fail to correctly rebuild the NVIDIA kernel module packages when a new Linux kernel is installed. When this happens, it is usually sufficient to invoke Akmods manually and regenerate the module mapping files by running the following commands in a virtual console, and then rebooting:

$ sudo akmods --force
$ sudo depmod

How Do I Install An Older CUDA Version Using A Network Repo?

Depending on your system configuration, you may not be able to install old versions of CUDA using the cuda metapackage. In order to install a specific version of CUDA, you may need to specify all of the packages that would normally be installed by the cuda metapackage at the version you want to install.

If you are using yum to install certain packages at an older version, the dependencies may not resolve as expected. In this case, you may need to pass “--setopt=obsoletes=0” to yum to allow the installation of packages that are obsoleted by a later version than the one you are trying to install.

NVIDIA CUDA Toolkit Software Overview

NVIDIA CUDA Toolkit Technical Specification

| Specification | Value |
| --- | --- |
| Version | 11.3.0 |
| File Size | 2.7 MB |
| Languages | English |
| License | Free |
| Developer | NVIDIA Corporation |

Conclusion

NVIDIA CUDA Toolkit is a valuable resource for learning to build GPU-accelerated apps, for beginners and advanced programmers as well as software testers. Its components are useful at every stage of the application development cycle.

Whether you are planning an app’s basic architecture and configuration model, testing components and programs, optimizing tools and processes, or deploying the result, the NVIDIA CUDA Toolkit provides tools for the task.
